Hey folks, welcome to pjoshi15.com! SDXL Turbo is one of the best image-generation diffusion models right now (as of Jan 2024), and its USP is the speed of image generation.

I have already covered text-to-image generation with SDXL Turbo, i.e., you load the model, enter a text prompt describing your image, and the model generates that image for you.

In this tutorial, we will talk about Image-to-Image, or img2img, generation. In img2img, the AI model receives an image as input in addition to the text prompt.

The model then modifies that image according to the prompt and a few other parameters. We will use SDXL Turbo here, but other models such as SDXL 1.0, Stable Diffusion v1.5, and many others can be used as well (each with its matching img2img pipeline).
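Roughly speaking, img2img does not start from pure noise the way text-to-image does: the input image is encoded into latents, partially noised, and then denoised under the guidance of the prompt. The strength parameter used later in this tutorial controls how noisy that starting point is. The toy function below illustrates only the noising idea, using the DDPM-style formula on plain Python lists. It is not SDXL Turbo's code (the schedulers in diffusers implement this step in their own way), and `noise_to_level` is a hypothetical name.

```python
import math

def noise_to_level(x0, alpha_bar, eps):
    """Toy DDPM-style forward noising: sqrt(a) * x0 + sqrt(1 - a) * eps, element-wise.

    alpha_bar = 1 keeps the input untouched; alpha_bar = 0 gives pure noise.
    Higher img2img strength corresponds to starting from a smaller alpha_bar.
    """
    if not 0.0 <= alpha_bar <= 1.0:
        raise ValueError("alpha_bar must be in [0, 1]")
    keep, mix = math.sqrt(alpha_bar), math.sqrt(1.0 - alpha_bar)
    return [keep * x + mix * e for x, e in zip(x0, eps)]

pixels = [0.2, -0.5, 0.9]  # stand-in for the encoded image latents
noise = [1.0, -1.0, 0.5]   # stand-in for a Gaussian noise sample
for a in (1.0, 0.5, 0.0):
    print(a, noise_to_level(pixels, a, noise))
```

The less of the original signal survives the noising, the more freedom the denoiser has to follow the prompt instead of the input image.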

Open Colab Notebook and install libraries

Let’s install the Diffusers and Accelerate libraries. Make sure a GPU runtime is enabled in your notebook.

```python
!pip install accelerate
!pip install diffusers==0.23.0

import torch
from diffusers import StableDiffusionXLImg2ImgPipeline
from diffusers.utils import load_image, make_image_grid
```

Load the SDXL Turbo model

```python
# Create the img2img pipeline from the SDXL Turbo checkpoint (fp16 weights)
pipe = StableDiffusionXLImg2ImgPipeline.from_pretrained(
    "stabilityai/sdxl-turbo", torch_dtype=torch.float16, variant="fp16"
)

# Transfer the pipeline to the GPU
pipe = pipe.to("cuda")
```

Load reference image

Next, we will load a reference image that will serve as the base image for image-to-image generation.

```python
# Load the image (face.webp must first be uploaded to the Colab session)
ref_image = load_image("face.webp")

# Display the image
ref_image
```
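In img2img the reference image is encoded into latents, so the generated image generally comes out at the reference image's resolution. If face.webp is large or has odd dimensions, resizing it first can help. The helper below is a hypothetical sketch (`fit_size` and the 768-pixel cap are my assumptions, not part of diffusers): it keeps the aspect ratio, caps the longer side, and rounds each side down to a multiple of 8, since SDXL's VAE downsamples images by a factor of 8.

```python
def fit_size(width: int, height: int, max_side: int = 768, multiple: int = 8) -> tuple:
    """Return a (width, height) that keeps the aspect ratio, caps the longer
    side at max_side, and rounds both sides down to a multiple of `multiple`."""
    longest = max(width, height)
    if longest > max_side:
        # Integer arithmetic avoids floating-point rounding surprises
        width = width * max_side // longest
        height = height * max_side // longest
    width = max(width // multiple * multiple, multiple)
    height = max(height // multiple * multiple, multiple)
    return width, height

# Usage in the notebook (PIL's Image.resize takes a (width, height) tuple):
# ref_image = ref_image.resize(fit_size(*ref_image.size))
print(fit_size(1024, 1536))  # (512, 768)
```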

Generate images using SDXL Turbo

Now we will use the SDXL Turbo img2img pipeline to generate a few images. The biggest benefit of SDXL Turbo is that it needs only a handful of inference steps (4 to 9 is a good range here) to produce a good-quality image, which makes quick experiments easy.
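In diffusers' img2img pipelines, strength also decides how much of the step schedule actually runs: according to the diffusers documentation, the pipeline performs int(num_inference_steps * strength) denoising steps. So with the settings used next (7 steps, strength 0.5), SDXL Turbo runs only 3 steps. The small function below is a sketch that mirrors that rule without calling diffusers; `effective_steps` is a hypothetical name.

```python
def effective_steps(num_inference_steps: int, strength: float) -> int:
    """Denoising steps an img2img run performs, assuming the diffusers rule
    int(num_inference_steps * strength), capped at num_inference_steps."""
    return min(int(num_inference_steps * strength), num_inference_steps)

for steps, strength in [(7, 0.5), (7, 0.6), (7, 0.1), (10, 0.1)]:
    print(f"steps={steps}, strength={strength} -> {effective_steps(steps, strength)} step(s)")
# steps=7, strength=0.5 -> 3 step(s)
# steps=7, strength=0.6 -> 4 step(s)
# steps=7, strength=0.1 -> 0 step(s)
# steps=10, strength=0.1 -> 1 step(s)
```

A result of 0 means no denoising would happen at all, which is why a low strength needs a higher num_inference_steps.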

```python
prompt = "a 50 year old man"

results = pipe(
    prompt=prompt,
    height=768,
    width=512,
    image=ref_image,
    num_inference_steps=7,
    guidance_scale=2,
    strength=0.5,
    generator=torch.manual_seed(40183),
)
```

```python
# Display the input image and the generated image side by side
make_image_grid([ref_image, results.images[0]], rows=1, cols=2)
```

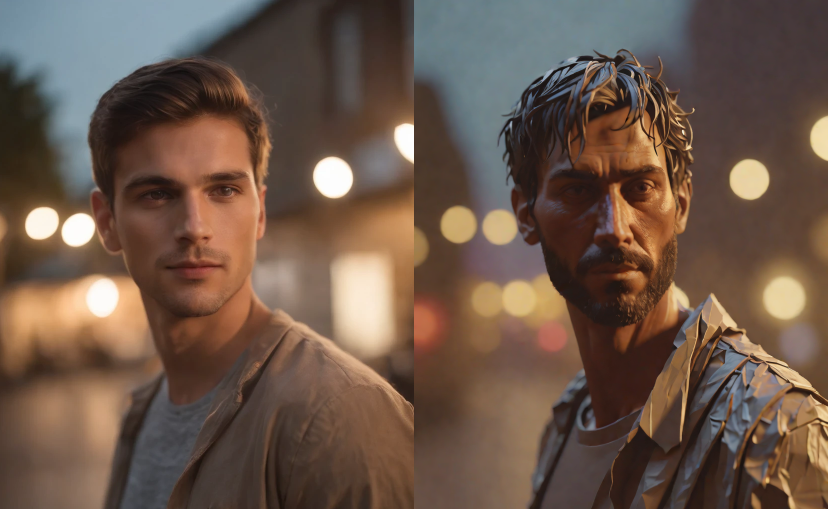

As you can see, SDXL Turbo has altered the input image only slightly and turned the subject into an older man, as the prompt asked.

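The important parameters here are guidance_scale, strength, and generator. I suggest you start with guidance_scale = 0 and strength = 0.1 and play around with the generator's seed value. The diffusers documentation recommends disabling guidance (guidance_scale = 0.0) for SDXL Turbo, so 0 is a natural starting point. With strength = 0.1, also raise num_inference_steps to at least 10, because diffusers' img2img pipelines need num_inference_steps × strength to be at least 1.

Once you find a seed value that gives you the kind of images you want, you can start changing the guidance_scale and strength parameters.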
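The seed-first workflow described above can be written down as a small two-phase plan: first sweep seeds with fixed settings, then sweep guidance_scale and strength for the chosen seed. This is a hypothetical helper (`plan_sweep`, `min_steps_for`, and the default of 7 steps are my own choices, not diffusers functions). It also raises num_inference_steps where needed so that num_inference_steps × strength stays at or above 1.

```python
from itertools import product

def min_steps_for(strength: float) -> int:
    """Smallest num_inference_steps for which int(steps * strength) >= 1."""
    if not 0 < strength <= 1:
        raise ValueError("strength must be in (0, 1]")
    steps = 1
    while int(steps * strength) < 1:
        steps += 1
    return steps

def plan_sweep(seeds, guidance_scales, strengths, best_seed=None, base_steps=7):
    """Phase 1 (best_seed is None): try every seed with the first guidance/strength pair.
    Phase 2: for the chosen seed, try every guidance_scale x strength combination."""
    if best_seed is None:
        combos = [(seed, guidance_scales[0], strengths[0]) for seed in seeds]
    else:
        combos = [(best_seed, g, s) for g, s in product(guidance_scales, strengths)]
    return [
        {"seed": seed, "guidance_scale": g, "strength": s,
         "num_inference_steps": max(base_steps, min_steps_for(s))}
        for seed, g, s in combos
    ]

# Usage in the notebook (pipe, prompt, ref_image from the cells above):
# for cfg in plan_sweep([1, 42, 40183], [0.0], [0.1]):
#     image = pipe(prompt=prompt, image=ref_image,
#                  num_inference_steps=cfg["num_inference_steps"],
#                  guidance_scale=cfg["guidance_scale"], strength=cfg["strength"],
#                  generator=torch.manual_seed(cfg["seed"])).images[0]
print(plan_sweep([1, 2], [0.0, 2.0], [0.1, 0.5]))
```

Keeping the seed fixed in phase 2 means any change you see comes from guidance_scale and strength rather than from a different noise sample.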

Let’s try out a few more prompts.

```python
# Note: diffusers passes the prompt to the text encoders as plain text, so
# AUTOMATIC1111-style "((...))" emphasis is not interpreted as extra weight here.
prompt = """
Portrait photo of muscular bearded guy,
((light bokeh)), intricate, elegant,
soft lighting, vibrant colors
"""

results = pipe(
    prompt=prompt,
    height=768,
    width=512,
    image=ref_image,
    num_inference_steps=7,
    guidance_scale=3,
    strength=0.5,
    generator=torch.manual_seed(40183),
)

make_image_grid([ref_image, results.images[0]], rows=1, cols=2)
```

```python
prompt = """
a man made of ral-paperstreamer, very detailed, haze lighting, 4k, uhd, masterpiece
"""

results = pipe(
    prompt=prompt,
    height=768,
    width=512,
    image=ref_image,
    num_inference_steps=7,
    guidance_scale=3,
    strength=0.6,
    generator=torch.manual_seed(40183),
)

make_image_grid([ref_image, results.images[0]], rows=1, cols=2)
```